Key Benefits

• Improved CPU performance

IPC (instructions per cycle) improvements of up to 50% deliver greater performance for some CPU-intensive workloads. By reducing instruction cache misses, instruction TLB misses, and branch mispredictions, Dynimize can provide the effect of having more CPU resources than actually exist.

• Reduced CPU energy consumption

With higher IPC, processors need fewer cycles to perform the same amount of work, which reduces energy consumption and improves performance per watt. Applications spend more time idling and less time burning CPU cycles, saving energy and freeing up CPU resources for other work.

• Fully autonomous

Dynimize runs as a standalone background process and automatically detects and optimizes CPU-intensive applications.

• Highly configurable

Dynimize can be run autonomously or invoked directly on specific program instances or processes. Controls can be put in place to limit the applications it targets.

• Zero downtime, instant ROI

Dynimize can be applied to off-the-shelf applications without having to make any changes to them or their host systems. Running applications do not need to be restarted, and their source code is not required. Applications can be optimized in 60 seconds.
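
The IPC and energy claims in the first two benefits reduce to simple arithmetic: for a fixed amount of work, cycles = instructions / IPC. The Python sketch below is illustrative only; the workload size and baseline IPC are hypothetical values, and treating energy as proportional to active cycles is a simplifying assumption, not a measured property of any CPU.

```python
def cycles_needed(instructions: float, ipc: float) -> float:
    """Core cycles required to retire `instructions` at a given IPC."""
    if ipc <= 0:
        raise ValueError("IPC must be positive")
    return instructions / ipc


def cycle_savings(ipc_before: float, ipc_after: float) -> float:
    """Fraction of cycles saved when IPC rises from ipc_before to ipc_after.

    The instruction count cancels out: savings = 1 - ipc_before / ipc_after.
    Under the simplifying assumption that energy per active cycle is constant,
    this is also the fractional energy saving for the same work.
    """
    return 1.0 - ipc_before / ipc_after


if __name__ == "__main__":
    work = 1_000_000_000       # hypothetical: one billion instructions of work
    base_ipc = 1.0             # hypothetical baseline IPC
    best_ipc = base_ipc * 1.5  # the "up to 50%" best case
    print(cycles_needed(work, base_ipc), cycles_needed(work, best_ipc))
    print(f"{cycle_savings(base_ipc, best_ipc):.1%} fewer cycles")  # prints "33.3% fewer cycles"
```

Note that a 50% IPC gain means about one third fewer cycles for the same work, not half as many.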

What is CPU Performance Virtualization?

CPU performance virtualization (CPV) is the use of software to emulate a higher-performing CPU microarchitecture than the one that actually exists on a system. This is accomplished by transparently optimizing the in-memory machine code of programs as they run, without the user having to modify the program in any way. The end goal is to mimic the user experience of having better-performing CPUs when running the applications being targeted for optimization.

As an optimization agent that is potentially invisible to the end user, CPV acts as an extension of the hardware platform in software. Modern CPUs perform optimizations to machine code instruction sequences on the fly in hardware, while CPU performance virtualization acts as an extension to this, performing more advanced optimizations to the instruction sequences using more complex software algorithms. The end result is the effect of having increased CPU performance and/or reduced power consumption for select software workloads.

Acting as a CPV agent, Dynimize currently improves the performance of workloads that spend a lot of time in CPU instruction cache misses, instruction TLB misses, and, to a lesser extent, branch mispredictions; all of its gains come from user mode execution. High-performance Online Transaction Processing (OLTP) workloads typically exhibit these characteristics, and for MySQL, Dynimize has been shown to improve effective CPU efficiency by up to 50%. In this way, software CPU performance virtualization produces the effect of having more efficient, resource-rich CPUs than are actually present.
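
To see why workloads dominated by front-end stalls are the ones that benefit, consider a back-of-the-envelope Amdahl-style model (an assumption for illustration, not how Dynimize measures its gains): if a fraction f of cycles is lost to front-end stalls and optimization removes a fraction r of those stall cycles, the remaining cycles are 1 - f * r of the original, so the speedup is 1 / (1 - f * r). The model ignores any overlap between stalls and useful work.

```python
def stall_reduction_speedup(stall_fraction: float, reduction: float) -> float:
    """Amdahl-style speedup estimate when front-end stall cycles are reduced.

    stall_fraction: share of cycles lost to front-end stalls, in [0, 1]
    reduction: share of those stall cycles removed by optimization, in [0, 1]
    Assumes stall cycles and useful cycles simply add up (no overlap).
    """
    if not (0.0 <= stall_fraction <= 1.0 and 0.0 <= reduction <= 1.0):
        raise ValueError("fractions must lie in [0, 1]")
    remaining = (1.0 - stall_fraction) + stall_fraction * (1.0 - reduction)
    if remaining <= 0.0:
        raise ValueError("all cycles removed; speedup is unbounded in this model")
    return 1.0 / remaining


if __name__ == "__main__":
    for f in (0.1, 0.3, 0.5):
        print(f"stalls={f:.0%}  speedup={stall_reduction_speedup(f, 0.6):.2f}x")
```

For example, removing 60% of the stall cycles gives roughly a 1.43x speedup when stalls account for half of all cycles, but only about 1.06x when they account for 10%.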

How does Dynimize work?

• A JIT Compiler

Dynimize is a Just-In-Time (JIT) compiler that profiles programs and then uses that profiling information to rewrite those programs' in-memory machine code for improved performance.

• Exploiting Run-Time Information

Because it optimizes an application's machine code at run-time, Dynimize has far more information about how the program is being used and its run-time environment than the original compiler that produced its executable code and shared libraries. This allows it to generate higher quality machine code for each specific run.

• Machine Code Specialization

This machine code specialization is done each time a program is run, and can optionally be done repeatedly throughout the lifetime of a program if its workload drastically changes from the last time it was optimized by Dynimize.

• Lightweight Daemon Process

Dynimize runs as a single lightweight background process in user mode and optimizes the in-memory instructions of other processes running on the same host OS, using the standard Linux ptrace system call to make changes to the processes being optimized.

• Optimizes In-Memory Instructions

Dynimize does not modify an optimized program's on-disk files in any way, including its data, configuration, executable, and shared library files.

• A New Frontier For JIT Compilers

Just-In-Time (JIT) compilers have been used for over 15 years in production environments to enhance the performance of managed runtimes such as the Java Virtual Machine and .NET. Those JIT compilers take a virtual machine code format (such as Java bytecode) as input and perform profile-directed optimizations while translating it into real machine code. Dynimize does the same, but uses real machine code as its input.
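
The profile-then-rewrite loop described above starts by deciding which code is hot. The following Python sketch shows one simple way to do that from sampled instruction addresses, the kind of data a sampling profiler produces: attribute each sample to a function, then keep the smallest set of hottest functions that covers a chosen share of samples. The function names, the symbol-table format, and the 90% coverage default are hypothetical; Dynimize's actual region selection is not described here.

```python
import bisect
from collections import Counter


def attribute_samples(samples, functions):
    """Count sampled instruction addresses per function.

    samples: iterable of instruction-pointer values from a sampling profiler
    functions: list of (start, end, name) tuples sorted by start; end is exclusive
    """
    starts = [start for start, _, _ in functions]
    counts = Counter()
    for ip in samples:
        i = bisect.bisect_right(starts, ip) - 1  # last function starting at or below ip
        if i >= 0:
            start, end, name = functions[i]
            if start <= ip < end:
                counts[name] += 1
    return counts


def select_hot_functions(counts, coverage=0.9):
    """Return the hottest functions that together cover `coverage` of all samples."""
    total = sum(counts.values())
    hot, covered = [], 0
    for name, n in counts.most_common():
        if covered >= coverage * total:
            break
        hot.append(name)
        covered += n
    return hot


if __name__ == "__main__":
    # Hypothetical symbol table and samples.
    funcs = [(0x1000, 0x1100, "parse"), (0x1100, 0x1200, "execute"), (0x2000, 0x2100, "log")]
    samples = [0x1010] * 7 + [0x1150] * 2 + [0x2050]
    print(select_hot_functions(attribute_samples(samples, funcs)))  # ['parse', 'execute']
```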

Dynimize Lifecycle

The following steps outline the various stages Dynimize goes through when optimizing programs.

1. Dynimize is started as a daemon user mode process.

    $ sudo dyni -start
    Dynimize started

2. Dynimize monitors system processes and identifies any CPU-intensive program that is on its list of allowed optimization targets. This phase consumes hardly any system resources.

    $ sudo dyni -status
    Dynimize is running

3. If Dynimize identifies such a program, it begins profiling it in detail, collecting statistics about how much time is spent in each part of the program, along with other execution characteristics.

    $ sudo dyni -status
    Dynimize is running
    mysqld, pid: 20722, profiling

4. Dynimize optimizes the running program and consumes additional system resources while doing so. This typically takes anywhere from 30 to 300 seconds.

    $ sudo dyni -status
    Dynimize is running
    mysqld, pid: 20722, dynimizing

5. Dynimize has finished optimizing the program and has released any resources it consumed in stage 4.

    $ sudo dyni -status
    Dynimize is running
    mysqld, pid: 20722, dynimized

6. If Dynimize was started with the reoptimize option and identifies a previously dynimized program that is CPU intensive and whose workload has drastically changed, it returns to stage 4; otherwise it returns to stage 2.

7. Dynimize can do this to several processes in parallel.

    $ sudo dyni -status
    Dynimize is running
    mysqld, pid: 20722, dynimizing
    sysbench, pid: 20770, dynimizing
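
The stage transitions above can be summarized as a small per-process state machine. In this Python sketch the state names follow the labels printed by `dyni -status`; the `UNTRACKED` state, the `next_status` function, and its boolean inputs are hypothetical stand-ins for decisions the lifecycle describes but does not specify.

```python
from enum import Enum


class Status(Enum):
    """Per-process states; the last three match labels printed by `dyni -status`."""
    UNTRACKED = "untracked"    # hypothetical: stage 2, daemon is only monitoring
    PROFILING = "profiling"    # stage 3
    DYNIMIZING = "dynimizing"  # stage 4
    DYNIMIZED = "dynimized"    # stage 5


def next_status(status, *, whitelisted, cpu_intensive,
                reoptimize=False, workload_changed=False):
    """Hypothetical transition function following stages 2 to 6 of the lifecycle."""
    if status is Status.UNTRACKED:
        # Stage 2 -> 3: only CPU-intensive programs on the allowed list are profiled.
        return Status.PROFILING if (whitelisted and cpu_intensive) else Status.UNTRACKED
    if status is Status.PROFILING:
        return Status.DYNIMIZING  # stage 3 -> 4
    if status is Status.DYNIMIZING:
        return Status.DYNIMIZED   # stage 4 -> 5
    # Stage 6: a dynimized process goes back to stage 4 only when reoptimization is
    # enabled and its CPU-intensive workload has drastically changed; otherwise it
    # stays dynimized while the daemon resumes monitoring (stage 2).
    if reoptimize and cpu_intensive and workload_changed:
        return Status.DYNIMIZING
    return Status.DYNIMIZED


if __name__ == "__main__":
    s = Status.UNTRACKED
    for _ in range(3):
        s = next_status(s, whitelisted=True, cpu_intensive=True)
        print(f"mysqld, pid: 20722, {s.value}")  # profiling, dynimizing, dynimized
```

Because each process carries its own state, handling several processes in parallel (stage 7) amounts to keeping one status per pid.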

Product Scope

Note that the following scope applies to the current release of Dynimize 1.0 and will expand in future product releases.

To obtain benefit from the current version of Dynimize, all of the following workload conditions must be met:

• Linux

The workload runs on Linux, with a kernel version 2.6.32 or later.

• A small number of CPU intensive processes

On a given OS host where the workload is running, the workload must consist of one or a few CPU-intensive processes. Optimizing a large number of processes at once is not recommended.

• Long running programs

The processes being optimized have long lifetimes, and their workloads are long running in order to amortize the warmup time associated with optimization.

• x86-64

Optimized processes must be 64-bit, derived from x86-64 executables and shared libraries that comply with the x86-64 ABI and the ELF-64 format. Most ahead-of-time compiled applications on Linux meet this requirement.

• Dynamically Linked

Target processes must be dynamically linked to their shared libraries. Statically linked processes are not yet supported. Most Linux programs are dynamically linked.

• No self-modifying code

The target application must not be running its own Just-In-Time compiler such as those found in Java virtual machines. This therefore excludes Java applications.

• Front-end CPU stalls

The workload wastes a lot of time in CPU instruction cache misses, instruction TLB misses, and to a lesser extent branch mispredictions.

• User mode execution

Much of that wasted time is spent in user mode execution (as opposed to kernel mode), as Dynimize only optimizes user mode machine code.

Because of the above requirements, Dynimize takes a whitelist approach to deciding which programs may be optimized. MySQL and its variants are currently the supported optimization targets on that list, with others planned for the future. Other programs are not currently supported; while they can be used with Dynimize, they should be very thoroughly tested by the user or system administrator before being deployed in a production environment.
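
Several of the scope conditions can be checked mechanically. The Python sketch below combines a name-based allow-list with checks that a process's executable is a 64-bit, little-endian x86-64 ELF file that requests a dynamic loader (a `PT_INTERP` program header), which is how dynamically linked executables are usually recognized. The allow-list contents and the `may_optimize` helper are hypothetical; this is not how Dynimize implements its whitelist, and it does not test the remaining conditions (CPU intensity, front-end stalls, absence of a JIT).

```python
import os
import struct

ELFCLASS64 = 2    # e_ident[EI_CLASS] value for 64-bit objects
ELFDATA2LSB = 1   # e_ident[EI_DATA] value for little-endian objects
EM_X86_64 = 62    # e_machine value for AMD x86-64
PT_INTERP = 3     # program header type naming the dynamic loader

# Hypothetical allow-list: illustrative names only.
ALLOWED_NAMES = {"mysqld", "mariadbd"}


def is_dynamic_x86_64_elf(data: bytes) -> bool:
    """True if `data` starts with a 64-bit little-endian x86-64 ELF that has PT_INTERP."""
    if len(data) < 64 or data[:4] != b"\x7fELF":
        return False
    if data[4] != ELFCLASS64 or data[5] != ELFDATA2LSB:
        return False
    # ELF64 header: e_ident, e_type, e_machine, e_version, e_entry, e_phoff, e_shoff,
    # e_flags, e_ehsize, e_phentsize, e_phnum, e_shentsize, e_shnum, e_shstrndx
    fields = struct.unpack_from("<16sHHIQQQIHHHHHH", data, 0)
    machine, phoff, phentsize, phnum = fields[2], fields[5], fields[9], fields[10]
    if machine != EM_X86_64:
        return False
    for i in range(phnum):
        off = phoff + i * phentsize
        if off + 4 > len(data):
            return False  # program headers are not within the bytes we were given
        (p_type,) = struct.unpack_from("<I", data, off)
        if p_type == PT_INTERP:
            return True
    return False  # no dynamic loader requested: treat as statically linked


def may_optimize(pid: int) -> bool:
    """Hypothetical gate: allow-listed name plus ELF checks on the process's executable."""
    exe_path = f"/proc/{pid}/exe"
    if os.path.basename(os.readlink(exe_path)) not in ALLOWED_NAMES:
        return False
    with open(exe_path, "rb") as f:
        # Program headers normally follow the ELF header closely, so a prefix suffices here.
        return is_dynamic_x86_64_elf(f.read(64 * 1024))
```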

Dynimize System Overhead

Dynimize optimizes running software, and so it consumes CPU and memory resources while performing these optimizations. For it to be effective, this overhead must be more than offset by the performance gained through optimization. Once optimization is complete, Dynimize consumes hardly any system resources. This overhead therefore occurs early in a program's execution.

For this reason, the longer a program runs at steady state, the greater the benefit of using Dynimize since the overhead can be amortized over that period of time. Future versions of Dynimize will eventually eliminate most of this overhead.
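
The amortization argument can be made concrete with a simple two-phase model, assumed here for illustration: during an optimization window of w seconds, throughput drops by a fraction d, and afterwards it rises by a fraction g. Equating work done with and without optimization gives a break-even time of w(1 + d/g); any run longer than that comes out ahead. The parameter values in the usage note are hypothetical, not measured Dynimize figures.

```python
def work_done(t: float, *, window: float, slowdown: float, gain: float) -> float:
    """Work completed after t seconds, in units of baseline (unoptimized) seconds.

    Assumed model: throughput is (1 - slowdown) during the first `window`
    seconds while optimization runs, and (1 + gain) afterwards.
    """
    if t <= window:
        return (1.0 - slowdown) * t
    return (1.0 - slowdown) * window + (1.0 + gain) * (t - window)


def break_even_time(*, window: float, slowdown: float, gain: float) -> float:
    """Run length at which the optimized run has done as much work as the baseline.

    Solving (1 - d)w + (1 + g)(T - w) = T for T gives T = w(1 + d/g).
    """
    if gain <= 0:
        raise ValueError("a positive steady-state gain is needed to break even")
    return window * (1.0 + slowdown / gain)
```

With a 120-second window, a 10% slowdown during optimization, and a 20% steady-state gain, `break_even_time(window=120, slowdown=0.1, gain=0.2)` returns 180.0 seconds; an hour-long run under the same assumptions completes about 19% more work than the baseline.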

MySQL Sysbench OLTP throughput with and without Dynimize.

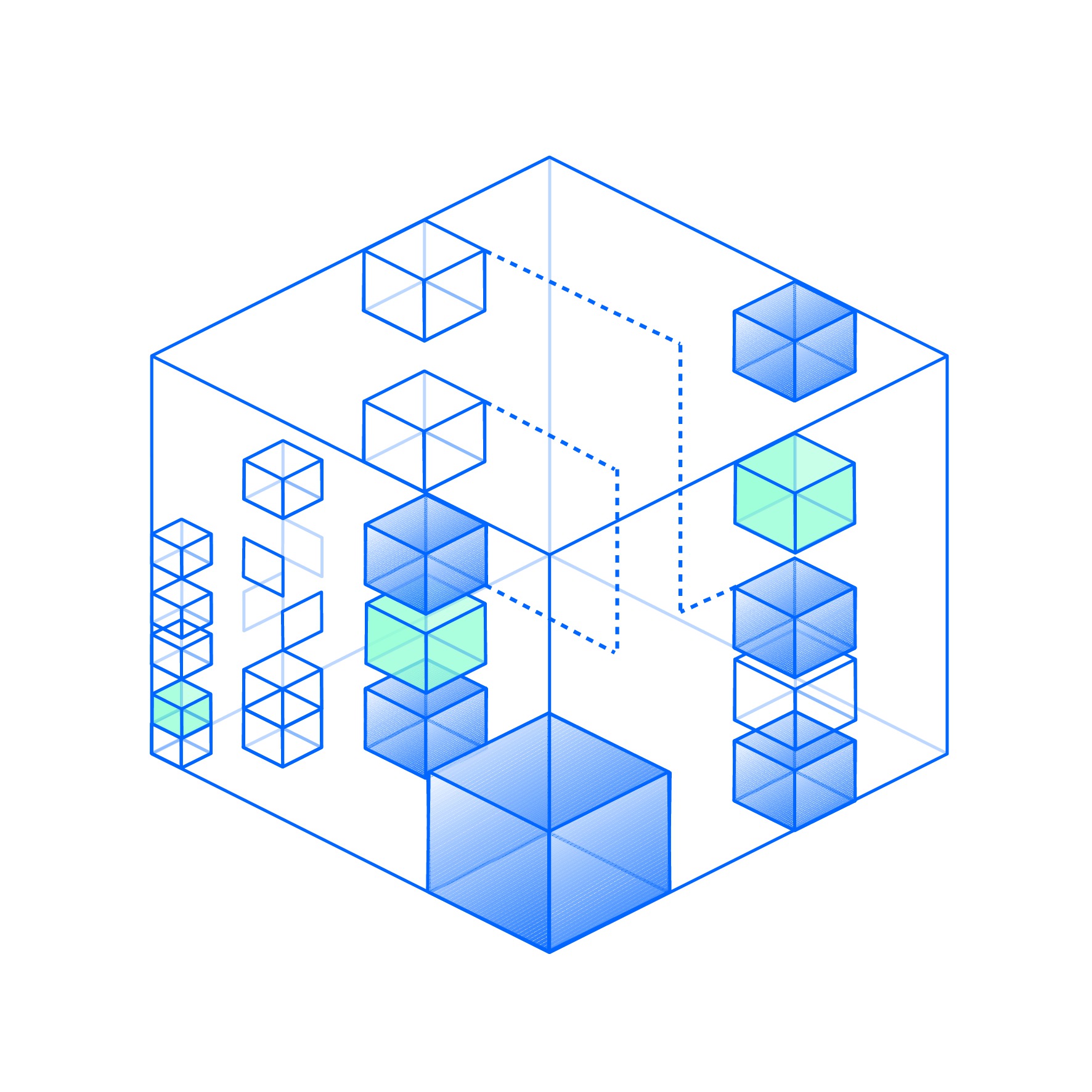

Dynimize Under The Hood

Dynimize is a user mode daemon process that is run with superuser permissions. It profiles applications using the Linux perf_events subsystem and interfaces with a target application's machine code through the Linux ptrace system call.
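
Before any code can be rewritten, a tool like this has to know where the target's machine code lives in memory. On Linux, one standard source for that is `/proc/<pid>/maps`, which lists each mapping's address range, permissions, and backing file; access to it is governed by ptrace access-mode checks, consistent with running with superuser permissions. The source does not say whether Dynimize reads this file; the Python sketch below only illustrates extracting executable regions from it.

```python
def executable_mappings(maps_text: str):
    """Yield (start, end, path) for each executable mapping in /proc/<pid>/maps text.

    Each line has the form: start-end perms offset dev inode [path]
    """
    for line in maps_text.splitlines():
        fields = line.split(maxsplit=5)
        if len(fields) < 5 or "x" not in fields[1]:
            continue  # malformed line or non-executable mapping
        start_hex, end_hex = fields[0].split("-")
        path = fields[5] if len(fields) == 6 else ""  # anonymous mappings have no path
        yield int(start_hex, 16), int(end_hex, 16), path


def read_executable_mappings(pid: int):
    """Read a live process's maps file (needs ptrace-level access to that process)."""
    with open(f"/proc/{pid}/maps") as f:
        return list(executable_mappings(f.read()))
```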

When optimizing a program, Dynimize loads a code cache into the target program's address space. A code cache is a memory region that will contain the optimized machine code generated by Dynimize. Dynimize splits the application's machine instructions into optimization regions, converts the machine code of each region into an intermediate representation (IR), and annotates that IR with the collected profiling information. Guided by this profiling information, it performs various optimizations on the IR. These include high-level, architecture-independent, dataflow-based compiler optimizations such as constant propagation and dead code elimination, as well as microarchitecture-specific optimizations such as those targeting hot/cold code placement and branch reduction. The IR is then converted back to machine code and committed to the code cache.
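
Hot/cold code placement can be illustrated with a simplified greedy layout in the spirit of classic profile-guided block positioning; this is a swapped-in technique for illustration, not Dynimize's documented algorithm. Starting at a region's entry, it repeatedly appends the most frequently taken successor so the hot path becomes straight-line fall-through code, then places the remaining warm blocks, and finally moves never-executed blocks to the end. Block names and counts are hypothetical profile data.

```python
def hot_cold_layout(entry, edge_counts, block_counts, cold_threshold=0):
    """Order basic blocks so the hot path falls through and cold blocks go last.

    entry: name of the region's entry block
    edge_counts: {block: {successor: number of times that edge was taken}}
    block_counts: {block: number of times the block executed}; lists every block
    Blocks executed no more than `cold_threshold` times are treated as cold.
    """
    placed, order = set(), []

    def is_hot(block):
        return block_counts.get(block, 0) > cold_threshold

    def chain_from(block):
        # Greedily extend a fall-through chain along the most frequently taken edge.
        while block is not None and block not in placed:
            placed.add(block)
            order.append(block)
            candidates = [(n, succ) for succ, n in edge_counts.get(block, {}).items()
                          if succ not in placed and is_hot(succ)]
            block = max(candidates)[1] if candidates else None

    chain_from(entry)
    # Start new chains from any hot blocks not yet placed, hottest first.
    for block in sorted(block_counts, key=block_counts.get, reverse=True):
        if block not in placed and is_hot(block):
            chain_from(block)
    # Cold blocks are moved to the end, out of the hot path.
    order.extend(b for b in block_counts if b not in placed)
    return order
```

Given a `check` block whose `fast` successor is taken 95 times, a `rare` successor taken 5 times, and an error path that never runs, this layout places `entry`, `check`, `fast`, and `exit` contiguously, then `rare`, and pushes the error block last.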

The target program is modified such that every invocation of each optimization region in the original machine code becomes an invocation of its optimized code in the code cache. All updates to the target process are atomic so that application functionality is always maintained at any stage throughout this process.
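
The text does not say how invocations are redirected to the code cache. One common technique in binary rewriters, shown here only as an assumption, is to overwrite the start of an original region with an x86-64 `jmp rel32` instruction: opcode `E9` followed by a signed 32-bit displacement measured from the end of the 5-byte instruction. The rel32 range is one reason a code cache may need to be mapped within about 2 GiB of the code it replaces. Making such a patch safe for concurrently running threads is a separate problem that this sketch does not address.

```python
import struct

JMP_REL32_OPCODE = 0xE9  # x86-64 near jmp with a 32-bit relative displacement
JMP_REL32_LEN = 5        # 1 opcode byte + 4 displacement bytes


def encode_jmp_rel32(src: int, dst: int) -> bytes:
    """Encode `jmp dst` as it would be written at address `src`.

    The displacement is relative to the address of the next instruction
    (src + 5) and must fit in a signed 32-bit integer.
    """
    disp = dst - (src + JMP_REL32_LEN)
    if not -(2**31) <= disp < 2**31:
        raise ValueError("target is out of rel32 range (about +/-2 GiB)")
    return bytes([JMP_REL32_OPCODE]) + struct.pack("<i", disp)


if __name__ == "__main__":
    # Hypothetical addresses: an original region entry and its code-cache copy.
    print(encode_jmp_rel32(0x401000, 0x7F0000).hex())
```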

High level illustration of how Dynimize works

Where Dynimize Helps

Dynimize accelerates high-performance online transaction processing (OLTP) workloads for the relational database products MySQL, MariaDB, and Percona Server on Linux.

The initial release of Dynimize focuses mostly on reducing front-end CPU stalls. These are the delays a CPU encounters when bringing instructions from memory to its execution units, caused by events such as instruction cache misses, branch mispredictions, and iTLB misses. Front-end stalls are typically a bottleneck in CPU-intensive OLTP workloads. MySQL's process architecture and the nature of optimizations that reduce front-end CPU stalls make MySQL OLTP workloads an obvious place to start with Dynimize. Other capabilities and applications will be supported in subsequent releases.
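
Whether a workload has this front-end profile can be estimated from hardware counter totals, for example those reported by Linux `perf stat` for generic events such as `instructions`, `L1-icache-load-misses`, `iTLB-load-misses`, and `branch-misses` (availability varies by CPU). The Python sketch below normalizes raw counts to events per thousand instructions (MPKI) so runs of different lengths can be compared. The counts in the example are hypothetical, and the sketch does not define any threshold for when Dynimize will help.

```python
def per_kilo_instruction(counts: dict, instructions_key: str = "instructions") -> dict:
    """Convert raw event counts into events per 1000 retired instructions (MPKI)."""
    instructions = counts[instructions_key]
    if instructions <= 0:
        raise ValueError("instruction count must be positive")
    return {name: 1000.0 * n / instructions
            for name, n in counts.items() if name != instructions_key}


if __name__ == "__main__":
    # Hypothetical totals, e.g. transcribed from a perf stat run.
    counts = {
        "instructions": 2_000_000_000,
        "L1-icache-load-misses": 60_000_000,
        "iTLB-load-misses": 1_000_000,
        "branch-misses": 10_000_000,
    }
    for event, mpki in per_kilo_instruction(counts).items():
        print(f"{event}: {mpki:.1f} per 1000 instructions")
```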